Fairness Indicators

Fairness Indicators helps developers and teams evaluate, improve, and compare machine learning models for fairness concerns. The project is part of the broader TensorFlow toolkit.

Key Features of Fairness Indicators

Fairness Indicators computes fairness metrics for binary and multiclass classifiers. It is built to handle large datasets and model deployments, including systems with millions or billions of users, such as those at Google.

Some of the key abilities of Fairness Indicators include:

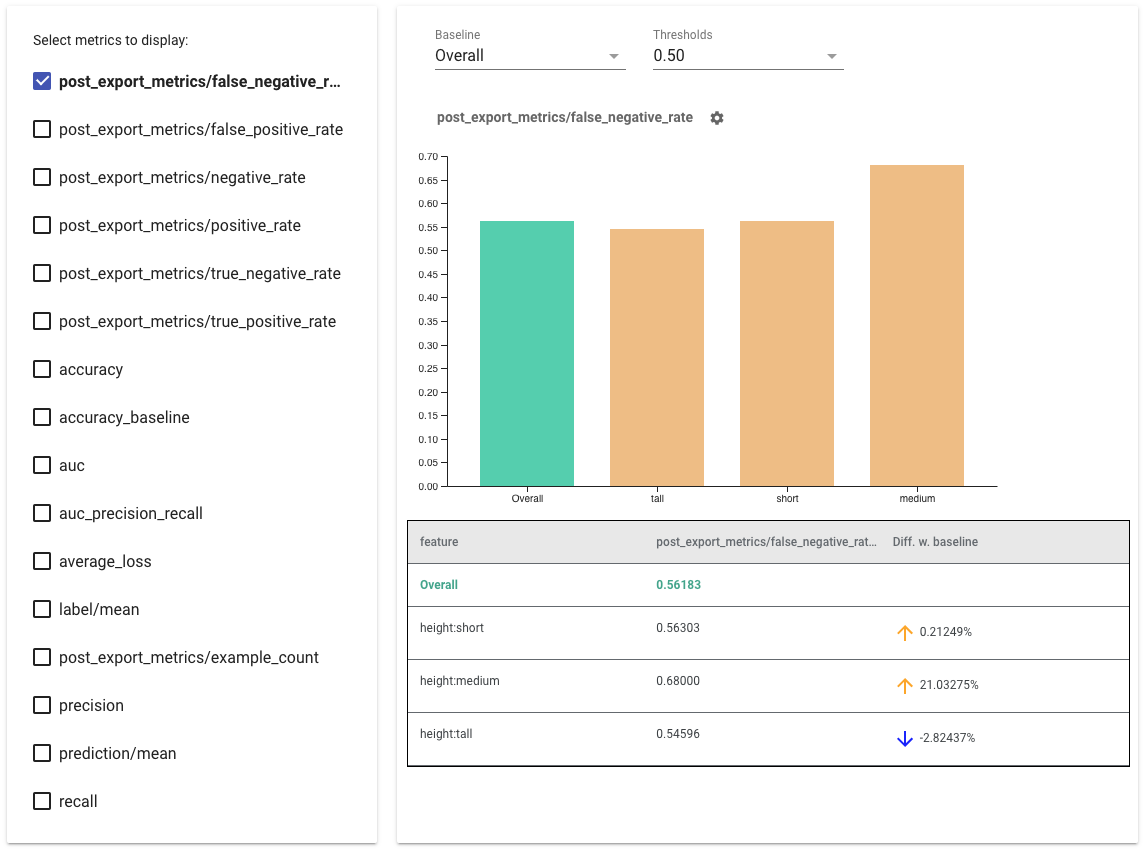

- Data Distribution Evaluation: It allows users to evaluate how a dataset is distributed across categories and groups.

- Model Performance Analysis: The tool supports performance evaluations across different user-defined groups, helping to ensure models perform well for all segments.

- Exploration of Data Slices: Users can delve into specific data slices, identify root causes of issues, and explore improvement opportunities.
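To make the idea of sliced evaluation concrete, here is a minimal sketch in plain Python. It does not use the Fairness Indicators API; the function name `sliced_error_rates`, the `group`, `label`, and `score` fields, and the sample records are all hypothetical. It computes the false positive rate and false negative rate separately for each group at a single decision threshold, which is the kind of per-slice comparison described above.

```python
from collections import defaultdict


def sliced_error_rates(examples, slice_key, threshold=0.5):
    """Return {slice_value: {"fpr": ..., "fnr": ...}} for binary labels.

    Hypothetical helper for illustration only; not part of any library.
    """
    counts = defaultdict(lambda: {"tp": 0, "fp": 0, "tn": 0, "fn": 0})
    for ex in examples:
        predicted_positive = ex["score"] >= threshold
        actual_positive = ex["label"] == 1
        if predicted_positive and actual_positive:
            outcome = "tp"
        elif predicted_positive:
            outcome = "fp"
        elif actual_positive:
            outcome = "fn"
        else:
            outcome = "tn"
        counts[ex[slice_key]][outcome] += 1

    rates = {}
    for value, c in counts.items():
        negatives = c["fp"] + c["tn"]
        positives = c["fn"] + c["tp"]
        rates[value] = {
            # A rate is None when the slice has no examples of that class.
            "fpr": c["fp"] / negatives if negatives else None,
            "fnr": c["fn"] / positives if positives else None,
        }
    return rates


# Made-up records: one slicing feature, a binary label, a model score.
examples = [
    {"group": "a", "label": 0, "score": 0.9},
    {"group": "a", "label": 0, "score": 0.2},
    {"group": "a", "label": 1, "score": 0.8},
    {"group": "b", "label": 0, "score": 0.1},
    {"group": "b", "label": 1, "score": 0.3},
    {"group": "b", "label": 1, "score": 0.7},
]
rates = sliced_error_rates(examples, "group")
for group, r in sorted(rates.items()):
    print(group, r)
```

A large gap between groups on the same metric is the kind of signal that would prompt a closer look at that slice.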

A detailed case study, complete with videos and exercises, shows how Fairness Indicators can be used to evaluate fairness concerns in your own projects over time.

Installation and Usage

To integrate Fairness Indicators into your workflow, you can simply install it via pip:

pip install fairness-indicators

The package includes components from TensorFlow Data Validation (TFDV) and TensorFlow Model Analysis (TFMA), extended with fairness metrics and a tool for comparing performance across data slices. For interactive exploration, the What-If Tool can be used to probe your models further.

Nightly Packages

For the latest updates, install the nightly packages with:

pip install --extra-index-url https://pypi-nightly.tensorflow.org/simple fairness-indicators

This also installs nightly versions of major dependencies such as TFDV and TFMA.
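After installing, it can be useful to confirm which versions actually ended up in the environment. The sketch below uses only the Python standard library (`importlib.metadata`, Python 3.8+). The helper name `installed_versions` is hypothetical, and the distribution names are assumed to be the PyPI names of the packages mentioned above.

```python
from importlib import metadata

# Assumed PyPI distribution names for the packages discussed above.
PACKAGES = (
    "fairness-indicators",
    "tensorflow-data-validation",
    "tensorflow-model-analysis",
)


def installed_versions(packages=PACKAGES):
    """Map each distribution name to its installed version, or None if absent."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


for name, version in installed_versions().items():
    print(f"{name}: {version or 'not installed'}")
```

The printed versions can then be checked against the project's compatibility table.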

Integrations with TensorFlow Tools

Fairness Indicators is part of the larger TensorFlow suite and integrates with its other tools:

- It can be accessed via the Evaluator component in TensorFlow Extended.

- It can be accessed from TensorBoard alongside other real-time metrics.

Fairness Indicators can also be used without the other TensorFlow tools. It works with TFMA as a standalone tool, and model-agnostic evaluation lets you compute fairness metrics from the outputs of any model.
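As a rough sketch of what a model-agnostic evaluation might look like, the example below evaluates predictions stored in a pandas DataFrame with TFMA. It assumes a TFMA version that provides `tfma.analyze_raw_data` and the `FairnessIndicators` metric; the column names `label`, `prediction`, and `group`, the threshold, and the helper name `run_model_agnostic_eval` are hypothetical. Check the calls against the version you have installed before relying on them.

```python
# Text-format EvalConfig: which columns hold labels and predictions,
# which metrics to compute, and how to slice the data.
EVAL_CONFIG_TEXT = """
model_specs {
  label_key: 'label'
  prediction_key: 'prediction'
}
metrics_specs {
  metrics {
    class_name: 'FairnessIndicators'
    config: '{"thresholds": [0.5]}'
  }
}
slicing_specs {}                        # overall, unsliced metrics
slicing_specs { feature_keys: 'group' } # metrics per value of 'group'
"""


def run_model_agnostic_eval(df):
    """Evaluate a DataFrame of labels and predictions from any model.

    Imports are done lazily so this module loads even without TFMA installed.
    """
    import tensorflow_model_analysis as tfma
    from google.protobuf import text_format

    eval_config = text_format.Parse(EVAL_CONFIG_TEXT, tfma.EvalConfig())
    return tfma.analyze_raw_data(df, eval_config)


# Example call (requires pandas and TFMA; the data is made up):
# import pandas as pd
# df = pd.DataFrame({
#     "label": [0, 1, 1, 0],
#     "prediction": [0.1, 0.8, 0.4, 0.7],
#     "group": ["a", "a", "b", "b"],
# })
# result = run_model_agnostic_eval(df)
```

Because the evaluation only needs labels, predictions, and slicing features, the model that produced the predictions does not have to be a TensorFlow model.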

Example Use Cases

The Examples directory houses several interesting use cases, including:

- Colaboratory Notebooks: These notebooks give a comprehensive overview of how Fairness Indicators can be used with real datasets.

- TensorFlow Hub: Demonstrations include evaluating models trained on various text embeddings, showcasing flexibility in application.

- TensorBoard Visualization: Guidance on visualizing fairness metrics directly within TensorBoard.

More Information and Compatible Versions

For additional guidance on fairness evaluation tailored to your specific context, a detailed guidance document is available. Furthermore, if any issues arise, you are encouraged to report them on the official GitHub issues page.

Lastly, a compatibility table lists which package versions work together. Using matching versions helps keep your environment stable and your analysis results reliable.